Latent Physionotrace

Mechanical Installation, Generative AI Algorithms, Real-Time Animation, Mixed Media

Dimensions Variable

2025

潛像描繪儀

機械裝置、機器繪圖、演算法、生成動畫、複合媒材

依場地而定

2025

Magic Noise: How AI Wanders in the Latent Space, Lin & Lin Gallery

神奇的雜訊:AI的潛在漫步方式,大未來林舍畫廊

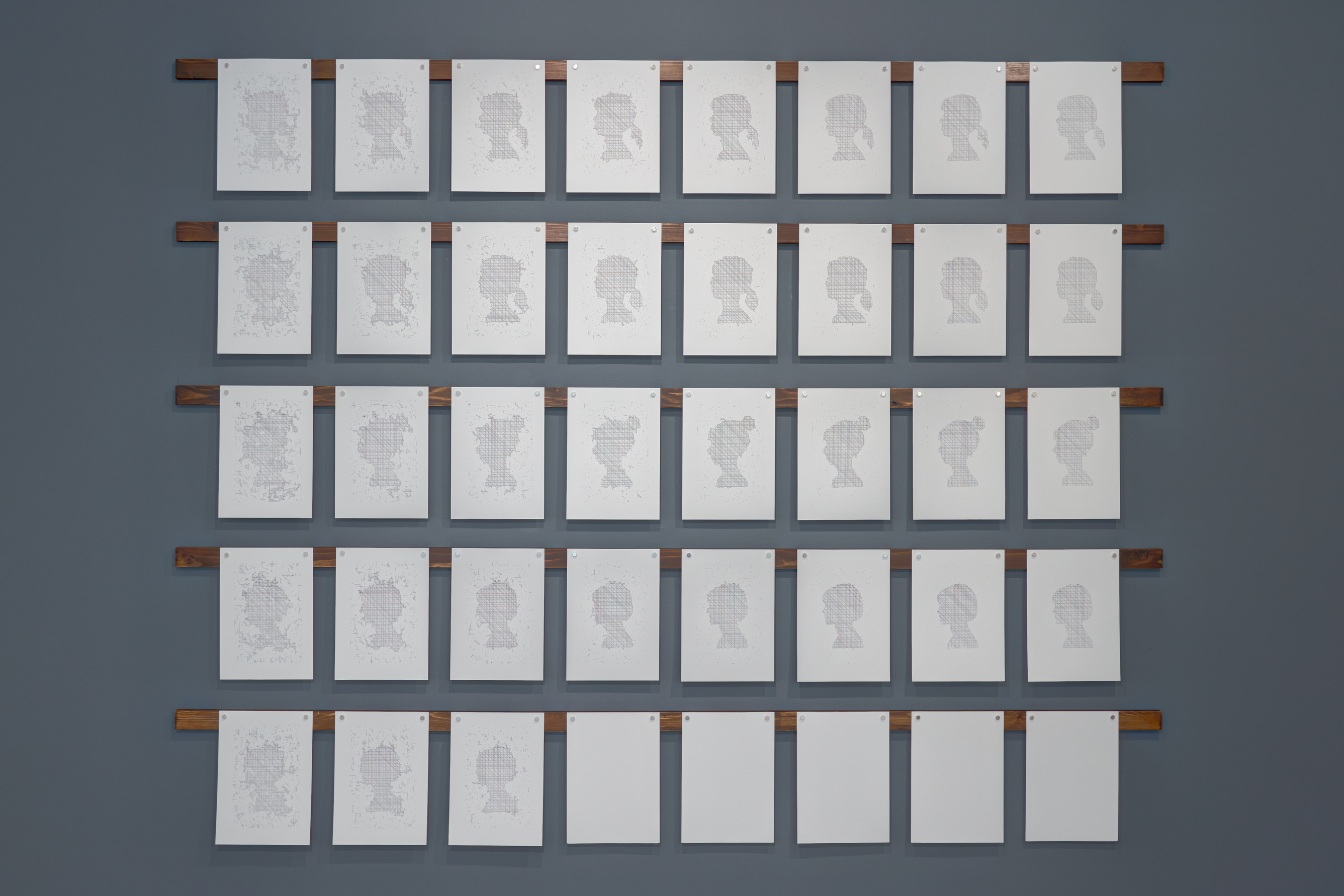

Portraits drawn by Latent Physionotrace, displayed at the exhibition entrance

展場入口處為《潛像描繪儀》畫出的肖像畫

In recent years, diffusion models have become the state of the art (SOTA) in image generation. They improve on earlier “one-step” generative methods by splitting generation into a series of smaller steps. At each step, instead of using the model’s computed output directly, the algorithm randomly selects another result near it. Both experimental data and the visible results show that this change produced a major breakthrough. Large language models follow a similar design principle, sampling each next token from a probability distribution rather than always taking the most likely one.

The generated output is a vector in latent space, which can be thought of as a coordinate. Randomly selecting another coordinate nearby amounts to diffusing outward from the original point according to a Gaussian probability distribution: nearby points are more likely, distant points less so. This step, known as adding noise, is where the diffusion model gets its name.
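As a minimal illustration of “selecting a nearby coordinate,” the Python sketch below adds independent Gaussian noise to each dimension of a point and evaluates the normal density at increasing distances. The three-dimensional point and the spread `sigma` are illustrative assumptions; real image latents have thousands of dimensions.

```python
import math
import random

def gaussian_density(distance: float, sigma: float) -> float:
    """Normal probability density at `distance` from the mean."""
    return math.exp(-0.5 * (distance / sigma) ** 2) / (sigma * math.sqrt(2 * math.pi))

def add_noise(point: list[float], sigma: float, rng: random.Random) -> list[float]:
    """Select a nearby coordinate: add independent N(0, sigma^2) noise to every dimension."""
    return [x + rng.gauss(0.0, sigma) for x in point]

rng = random.Random(0)
origin = [0.5, -1.2, 2.0]          # hypothetical 3-D latent coordinate
nearby = add_noise(origin, sigma=0.1, rng=rng)
print(nearby)

# Closer offsets are more probable than farther ones.
densities = [gaussian_density(d, sigma=0.1) for d in (0.0, 0.1, 0.2, 0.3)]
print([round(p, 3) for p in densities])
```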

Visualizations of Stable Diffusion or Midjourney usually present the generation process as “denoising.” Yet the algorithmic step that lets AI be discussed in terms of something like creativity is precisely the addition of noise. It is also the main reason why generative AI produces, and must produce, a different result each time. One can picture this step as the AI wandering and exploring through latent space, one generation step at a time.
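The claim that noise is what makes each result different can be checked with a toy one-dimensional loop. The hypothetical `wander` function stands in for a diffusion sampler: a fixed rule (not a trained network) proposes a point 20% closer to a target, and Gaussian noise with a linearly shrinking scale (also an assumption) then moves the walk to a nearby point. This is not how Stable Diffusion or Midjourney are implemented; it only isolates the role of the noise step.

```python
import random

def wander(start: float, target: float, steps: int, noise_scale: float, seed: int) -> list[float]:
    """Toy 1-D reverse process: a hypothetical 'model' proposes a point 20% closer to
    `target`, then Gaussian noise moves the walk to a nearby point instead."""
    rng = random.Random(seed)
    x = start
    path = [x]
    for t in range(steps, 0, -1):
        proposal = x + 0.2 * (target - x)           # stand-in for the model's prediction
        sigma = noise_scale * t / steps              # noise shrinks toward the final step
        x = proposal + sigma * rng.gauss(0.0, 1.0)   # the "add noise" step
        path.append(x)
    return path

no_noise_a = wander(0.0, 1.0, steps=10, noise_scale=0.0, seed=1)
no_noise_b = wander(0.0, 1.0, steps=10, noise_scale=0.0, seed=2)
noisy_a = wander(0.0, 1.0, steps=10, noise_scale=0.3, seed=1)
noisy_b = wander(0.0, 1.0, steps=10, noise_scale=0.3, seed=2)

print(no_noise_a[-1] == no_noise_b[-1])  # without noise, every run ends at the same point
print(noisy_a[-1], noisy_b[-1])           # with noise, each run wanders to a different result
```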

The work consists of three components: a Galton board, an AI image generation algorithm, and a Physionotrace.

近幾年成為影像生成模型SOTA(State of The Art,意指某特定時刻下,某技術、設備或科學領域所達到的最高發展水平或最高級狀態)的Diffusion Model(擴散模型),改良了過去「一步到位」的生成方式,把生成的過程拆成了若干個小step,並且在每一個步驟的運算中,並不直接採用模型運算出來的結果,而是在該結果的「附近」具有隨機性地選擇另一個結果。不論是實驗數據或是肉眼可見的結果,皆表明此改良取得躍升性突破。不僅是影像生成模型,大語言模型中也有類似的設計。

生成出來的結果是一個「潛空間」(Latent Space)的「向量」(可以理解成座標)。在「附近」隨機選擇另一個座標,則為從原本的座標以高斯機率分布「擴散」出去,離原本結果越近機率越高,越遠越小,這個步驟就是所謂的「加雜訊」,也就是「擴散模型」的命名由來。

在Stable Diffusion或是Midjourney生成過程的視覺化中,多半是以「降噪」的方式呈現,然而真正讓AI有可能往「創造力」討論的演算邏輯,其實是「加入雜訊」這個步驟,這也是生成式AI「為甚麼」且「必須」每次生成結果不一樣的主要原因。可以把這個步驟,想像成AI在一步一步的生成step中,在潛空間裡的漫步與探索。

本作品由三組裝置組成:道爾頓板、AI影像生成演算法、側面描繪儀(Physionotrace)。

Motorized Galton board, producing a normal distribution

道爾頓板動力裝置,產生常態分布

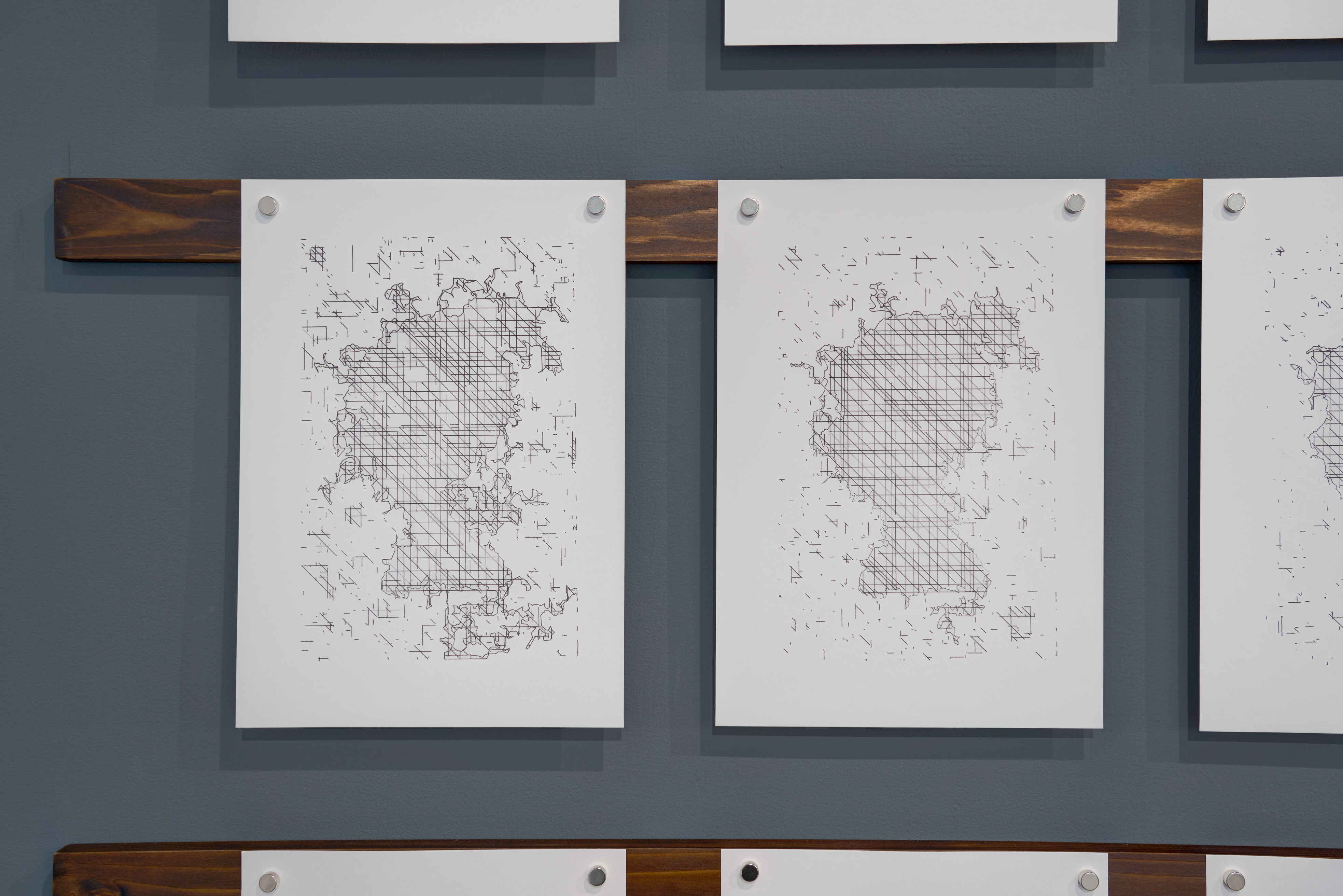

The work begins with a Galton board, a physical device in which balls bounce left or right off rows of pegs and pile up into an approximately Gaussian (normal) distribution. This randomness, arising from real-world physical behavior, is used to compute the Gaussian noise required at each iteration of the AI image generation model (a diffusion model). Guided by this noise, the model navigates latent space and produces half-length profile portraits.
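The Galton board’s behavior can be simulated in a few lines. Each ball makes one left-or-right choice per row of pegs, so its final slot follows a binomial distribution, which approaches a normal curve as the number of rows grows (the de Moivre–Laplace theorem). The row and ball counts below are hypothetical; the source does not give the dimensions of the physical board.

```python
import math
import random
from collections import Counter

def galton_board(balls: int, rows: int, rng: random.Random) -> Counter:
    """Simulate balls falling through `rows` of pegs. Each peg sends a ball right with
    p = 0.5, so the slot index (number of right bounces) is Binomial(rows, 0.5)."""
    return Counter(sum(rng.random() < 0.5 for _ in range(rows)) for _ in range(balls))

rng = random.Random(42)
rows = 12                                   # hypothetical number of peg rows
slots = galton_board(balls=5000, rows=rows, rng=rng)

# Binomial(n, p) has mean n*p and variance n*p*(1 - p).
mean = rows * 0.5
std = math.sqrt(rows * 0.25)
for k in range(rows + 1):
    z = (k - mean) / std                    # standardized slot position, roughly N(0, 1)
    print(f"{z:+.2f} {'#' * (slots[k] // 50)}")
```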

作品首先透過道爾頓板,產生來自真實物理行為的高斯分布(Gaussian Distribution,也就是常態分布)——這是一種源自現實世界的隨機分布。並依此計算出AI影像生成模型(Diffusion Model)在生成的疊代過程中所需的高斯雜訊,引導AI在潛空間(Latent Space)中進行探索,生成半身側面剪影的肖像畫。

Generated half-length profile silhouette portraits

生成半身側面剪影的肖像畫

These AI-generated portraits are displayed on a modified Physionotrace, a device invented in the late 18th century to trace human silhouettes. Here, the tracing paper is replaced by an LCD screen stripped of its backlight and polarizing film, and the light source traditionally used to cast the silhouette is replaced by the screen’s backlight, a connection to the virtual world. The tracing hand is no longer human but a robotic arm, which copies the AI portrait from the screen onto paper through a linkage mechanism. Each day the machine draws eight profile silhouettes in sequence, progressing from chaos to clarity.
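Historically, the Physionotrace used a pantograph linkage to transfer a traced outline at a fixed scale. Assuming the installation’s linkage behaves like an ideal pantograph (the source only says “linkage mechanism”), the mapping from a point traced on the screen to the pen on paper is a scaling about a fixed pivot. The coordinates, units, and 2x scale below are illustrative.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Point:
    x: float
    y: float

def pantograph(tracer: Point, pivot: Point, scale: float) -> Point:
    """Ideal pantograph (assumption): pivot, tracer and pen stay collinear, and the pen
    sits `scale` times as far from the pivot as the tracer does."""
    return Point(pivot.x + scale * (tracer.x - pivot.x),
                 pivot.y + scale * (tracer.y - pivot.y))

# Hypothetical contour traced on the screen (mm), copied at 2x onto paper.
contour = [Point(10, 10), Point(12, 15), Point(11, 20)]
pivot = Point(0, 0)
drawn = [pantograph(p, pivot, scale=2.0) for p in contour]
print(drawn)
```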

這些AI生成的肖像,顯示在一台改造的側面描繪儀上。側面描繪儀原為18世紀末用來描繪人像輪廓的裝置,我們將描圖紙替換成移除了背光板與偏光膜的液晶螢幕,並把傳統用來打出剪影的光源,替換成連結虛擬世界的「螢幕背光」。描圖的手也不再是人手,而是機械手臂,透過連桿機構,把螢幕上的AI肖像描繪到實體紙上。每天這台機器會從雜亂至清晰,依序繪製出8張側面肖像剪影。

Latent Physionotrace drawing profile silhouette portraits

《潛像描繪儀》繪製側面肖像剪影

By using noise drawn from the physical world instead of the pseudo-random number generators that AI conventionally relies on, the work establishes a link between the virtual latent space in which AI operates and the physical world humans inhabit. It extends the historical significance of the Physionotrace, a device that marked the shift from the artist’s subjective interpretation to mechanical precision and standardization, into the present revolution of AI-generated imagery, in which depiction moves from tracing the visible world to algorithmically recomposing a virtual one.
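The contrast with pseudo-random noise can be made concrete. A pseudo-random generator is a deterministic algorithm, so the same seed always reproduces the same “random” noise, which is why image generators commonly let users fix a seed to reproduce an image. A physical Galton board has no seed to replay. The sketch uses Python’s standard `random.Random`; the helper name `noise_from_prng` is hypothetical.

```python
import random

def noise_from_prng(seed: int, n: int) -> list[float]:
    """Draw n standard-normal values from a seeded pseudo-random generator."""
    rng = random.Random(seed)
    return [rng.gauss(0.0, 1.0) for _ in range(n)]

first = noise_from_prng(seed=1234, n=5)
replay = noise_from_prng(seed=1234, n=5)
other = noise_from_prng(seed=1235, n=5)

print(first == replay)  # same seed: the "random" noise is reproduced exactly
print(first == other)   # different seed: different noise
```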

這件作品試圖用「來自真實世界的雜訊」來取代傳統AI所使用的電腦亂數表,讓AI所運作的虛擬潛空間,能與人類所處的物理世界建立起某種連結。並將18世紀末側面描繪儀代表的歷史意義——見證影像從藝術家的主觀詮釋,邁向「準確化、標準化」的機器觀看方式的影像革命,進一步延伸至AI生成影像從可見世界的描摹到虛擬世界重組演算的再次革命。

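Each day, the Physionotrace draws eight profile silhouette portraits in sequence, progressing from chaos to clarity.

每天描繪儀會從雜亂至清晰,依序繪製出8張側面肖像剪影。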

Operation Steps

Galton Board: Generates an approximately Gaussian distribution through physical processes in the real world.

Sampling: Samples a specified number of pixels based on this Gaussian distribution.

Arrangement: Arranges the sampled pixels into a two-dimensional Gaussian noise map.

Transformation: Converts the Gaussian noise map into a latent-space noise map.

Generation: Uses this transformed noise map as the added noise required in each step of the diffusion-based image generation process.

Hatching: Extracts the contours of the generated portrait and fills them with lines.

Physionotrace: Displays the image on the modified Physionotrace screen; a drawing machine traces the image and reproduces it as a physical drawing through a linkage mechanism.
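The Sampling, Arrangement, Transformation and Generation steps above can be sketched as a small pipeline. The source does not describe how samples are drawn or how the noise map is converted to latent space, so every choice here is an assumption: values are drawn in proportion to the Galton board’s slot counts with uniform jitter inside each slot, the map is renormalized to zero mean and unit variance, and it is then reshaped into a channels × height × width latent. The helper names are hypothetical, the board is simulated rather than measured, and the 4 × 8 × 8 latent is a scaled-down stand-in for the 4 × 64 × 64 latent that Stable Diffusion v1 uses for a 512 × 512 image.

```python
import math
import random
from collections import Counter

def sample_pixels(slot_counts: Counter, rows: int, n: int, rng: random.Random) -> list[float]:
    """Sampling: pick slots in proportion to their ball counts, jitter uniformly inside the
    slot (assumption), and standardize with the binomial mean rows/2 and std sqrt(rows/4)."""
    slots = sorted(slot_counts)
    weights = [slot_counts[k] for k in slots]
    picks = rng.choices(slots, weights=weights, k=n)
    std = math.sqrt(rows / 4)
    return [(k + rng.uniform(-0.5, 0.5) - rows / 2) / std for k in picks]

def arrange(values: list[float], height: int, width: int) -> list[list[float]]:
    """Arrangement: lay the samples out row by row as a height x width noise map."""
    return [values[r * width:(r + 1) * width] for r in range(height)]

def to_latent(noise_map: list[list[float]], channels: int, h: int, w: int) -> list[list[list[float]]]:
    """Transformation (hypothetical): renormalize to zero mean and unit variance,
    then reshape into a channels x h x w latent."""
    flat = [v for row in noise_map for v in row]
    if len(flat) != channels * h * w:
        raise ValueError("noise map size does not match the latent shape")
    mu = sum(flat) / len(flat)
    sd = math.sqrt(sum((v - mu) ** 2 for v in flat) / len(flat))
    flat = [(v - mu) / sd for v in flat]
    return [[flat[(c * h + i) * w:(c * h + i + 1) * w] for i in range(h)] for c in range(channels)]

# Simulated board (12 rows, 5000 balls) standing in for the physical counts.
rng = random.Random(7)
ROWS = 12
counts = Counter(sum(rng.random() < 0.5 for _ in range(ROWS)) for _ in range(5000))

C, H, W = 4, 8, 8   # small stand-in latent shape
STEPS = 20          # hypothetical number of diffusion steps
step_noise = []
for _ in range(STEPS):
    pixels = sample_pixels(counts, ROWS, n=C * H * W, rng=rng)
    noise_map = arrange(pixels, height=16, width=16)   # 256 samples as a 16 x 16 map
    step_noise.append(to_latent(noise_map, C, H, W))    # Generation: one noise latent per step
```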

運作步驟

Galton Board: 在物理世界,製造出一組高斯分布

Sampling:以此高斯分布取樣出指定數量的像素

Arrangement:將這些像素排列成二維的高斯噪聲圖

Transformation:將高斯噪聲圖轉換成潛空間的噪聲圖

Generation:將此轉換後的噪聲圖作為生成的step中所需加入的雜訊

Hatching:找出生成的肖像圖的輪廓,並用線條填充

Physionotrace:將影像顯示於側面描繪儀的螢幕上,以繪圖機描繪影像,並透過連桿繪製實體畫作
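The Hatching step is not specified further in the source. One simple way to realize it, shown here as an assumption rather than the work’s actual method, is to mark silhouette pixels that border the background as the contour and to fill the shape with horizontal scanlines, one segment per run of filled pixels on every `spacing`-th row. The mask is a hypothetical toy silhouette and the function names are illustrative.

```python
def contour_pixels(mask: list[list[int]]) -> set[tuple[int, int]]:
    """Contour: filled pixels with at least one empty 4-neighbour (outside counts as empty)."""
    h, w = len(mask), len(mask[0])

    def filled(r: int, c: int) -> bool:
        return 0 <= r < h and 0 <= c < w and mask[r][c] == 1

    neighbours = ((1, 0), (-1, 0), (0, 1), (0, -1))
    return {
        (r, c)
        for r in range(h)
        for c in range(w)
        if mask[r][c] == 1 and not all(filled(r + dr, c + dc) for dr, dc in neighbours)
    }

def hatch_lines(mask: list[list[int]], spacing: int) -> list[tuple[int, int, int]]:
    """Scanline hatching: on every `spacing`-th row, emit (row, first_col, last_col)
    for each horizontal run of filled pixels."""
    segments = []
    for r in range(0, len(mask), spacing):
        row, c = mask[r], 0
        while c < len(row):
            if row[c] == 1:
                start = c
                while c < len(row) and row[c] == 1:
                    c += 1
                segments.append((r, start, c - 1))
            else:
                c += 1
    return segments

# Hypothetical toy silhouette (1 = inside the portrait).
mask = [
    [0, 0, 1, 1, 1, 0, 0, 0],
    [0, 1, 1, 1, 1, 1, 0, 0],
    [0, 1, 1, 1, 1, 1, 1, 0],
    [0, 1, 1, 1, 1, 1, 1, 0],
    [0, 0, 1, 1, 1, 1, 0, 0],
    [0, 0, 0, 1, 1, 0, 0, 0],
]
outline = contour_pixels(mask)
hatches = hatch_lines(mask, spacing=2)
print(sorted(outline))
print(hatches)
```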

Special Thanks

Lin & Lin Gallery

Huang Hao-Min — Installation Design and Production

特別感謝

大未來林舍畫廊

黃浩旻 裝置設計製作
