Exploration and Exploitation

AI Interactive Video

Dimensions variable

2024

Ars Electronica Festival 2024

Installation view at Ars Electronica Festival (photo: 凹焦影像工作室; image courtesy of the National Taiwan Museum of Fine Arts)

Does AI exacerbate social divisions when it faces the dilemma between exploring and exploiting humans? The rise of social media has changed how information flows and in which direction. Because AI recommendation algorithms pander to users' existing preferences, they seem to give people access to more information. But do they also make it harder for people with different standpoints to encounter each other's perspectives, producing echo chambers? And does the pursuit of reach shape the content that creators produce?

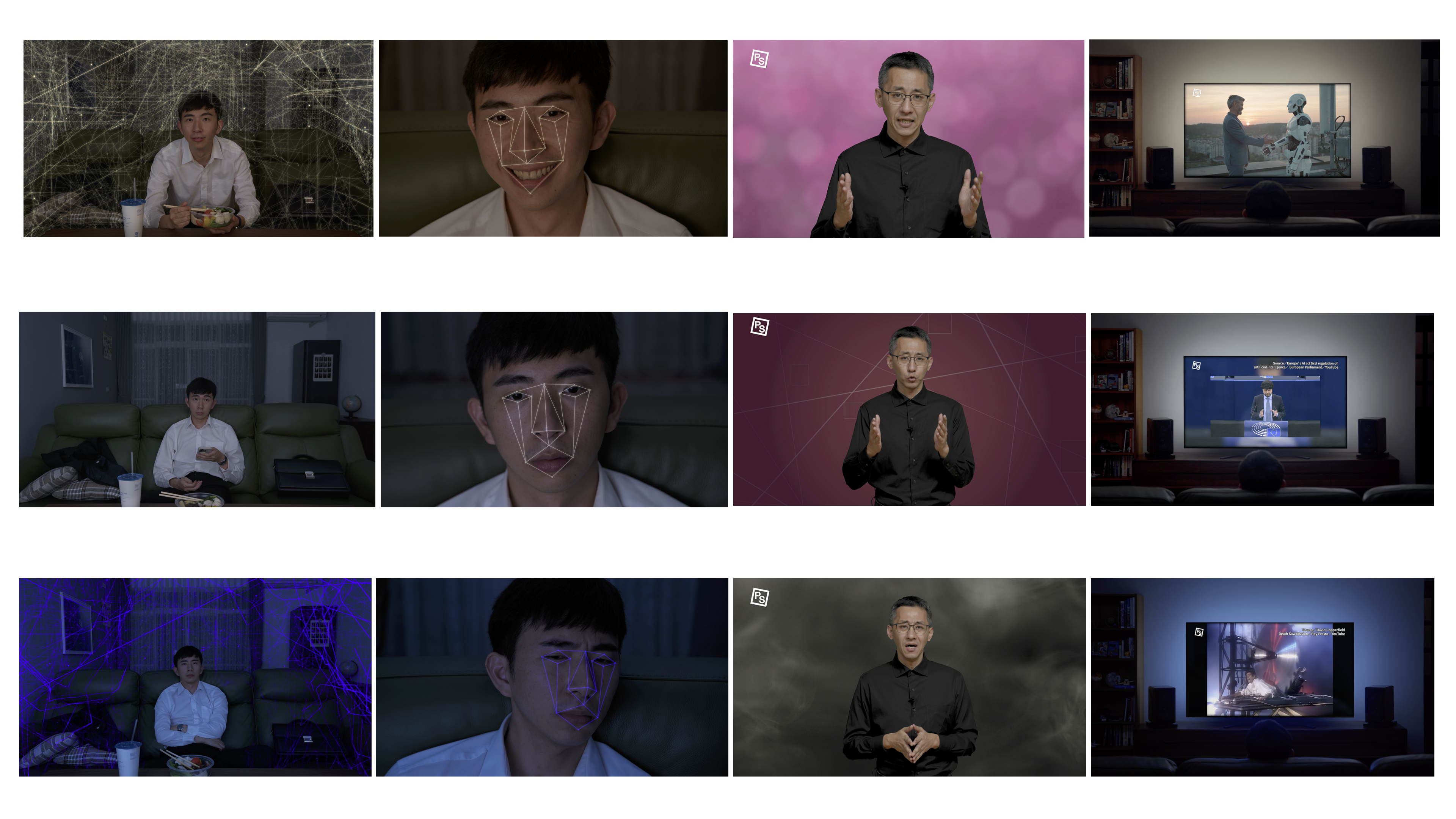

We developed an AI interactive video system that uses multiple cameras installed at the exhibition venue. An AI facial emotion recognition system detects the emotions on audience members' faces, and the video switches between narrative branches based on the detected emotions.

The video content portrays an office worker returning home after work and watching a YouTube video recommended by the algorithm. The YouTube video is from an educational channel introducing the topic of "virtual companions." The audience's emotions—"positive," "neutral," or "negative"—correspond to three different perspectives in the introduction: "tech optimism," "scientific explanation," and "pessimistic warnings," respectively.
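The page does not say how the individual emotions reported by the recognizer are grouped into "positive," "neutral," and "negative," or how several viewers' faces are combined into one decision. The sketch below is a minimal illustration under stated assumptions: it assumes the eight labels used by HSEmotion's 8-class models, uses a hypothetical valence grouping (`VALENCE`), and picks the next branch by majority vote. The names `VALENCE`, `BRANCH`, and `choose_branch` are illustrative and not taken from the project's code.

```python
from collections import Counter
from typing import Iterable

# Assumption: labels as produced by HSEmotion's 8-class models.
# Hypothetical grouping into three valence classes, not the artwork's actual table.
VALENCE = {
    "Happiness": "positive",
    "Neutral": "neutral",
    "Surprise": "neutral",  # ambiguous valence; treated as neutral in this sketch
    "Anger": "negative",
    "Contempt": "negative",
    "Disgust": "negative",
    "Fear": "negative",
    "Sadness": "negative",
}

# The three perspectives described in the text.
BRANCH = {
    "positive": "tech optimism",
    "neutral": "scientific explanation",
    "negative": "pessimistic warnings",
}


def choose_branch(detected_labels: Iterable[str]) -> str:
    """Pick the next branch by majority vote over emotion labels
    detected on audience faces during one viewing window."""
    # Unknown labels are counted as neutral.
    votes = Counter(VALENCE.get(label, "neutral") for label in detected_labels)
    if not votes:
        # Nobody detected: fall back to the neutral branch.
        return BRANCH["neutral"]
    top_count = max(votes.values())
    # Ties are broken in favour of neutral, then positive, then negative (arbitrary choice).
    for valence in ("neutral", "positive", "negative"):
        if votes[valence] == top_count:
            return BRANCH[valence]
    return BRANCH["neutral"]  # unreachable; kept for type checkers


print(choose_branch(["Happiness", "Happiness", "Sadness", "Neutral"]))  # tech optimism
```

Majority voting is only one way to turn many faces into a single choice; a real installation might instead average per-class scores over time or weight viewers closest to the screen.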

Like an AI recommendation algorithm, the system follows a logic of giving viewers what they want: it chooses which perspective to play based on the emotions detected on their faces.

The YouTube segment was produced in collaboration with PanSci, who recorded three versions of the introduction (positive, neutral, and negative), each presented with a different color temperature and tone. The system detects the facial emotions of viewers as they watch and switches the video's branch according to their emotional responses.

Installation view at Ars Electronica Festival (photo: 凹焦影像工作室; image courtesy of the National Taiwan Museum of Fine Arts)

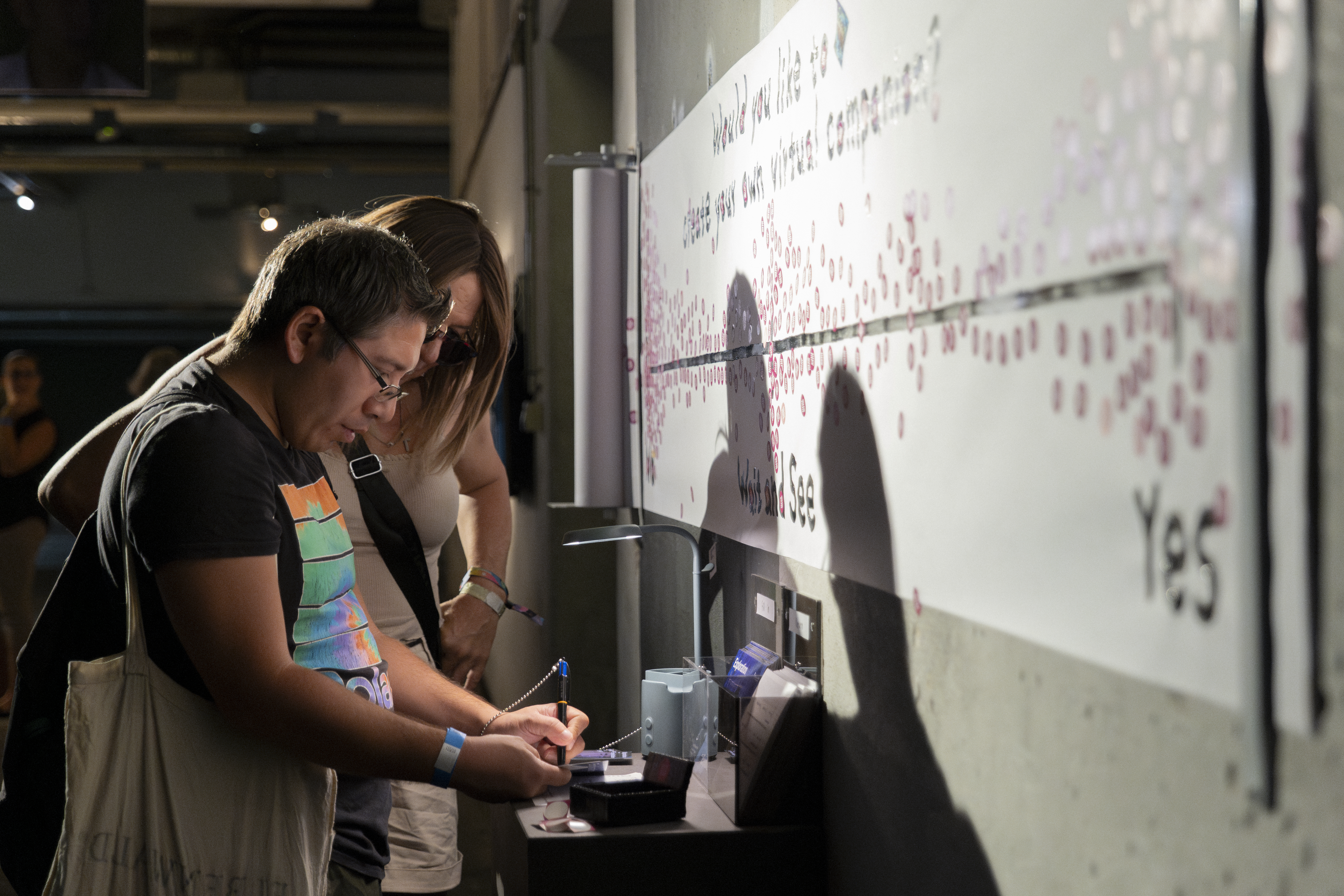

A message board was set up at the exhibition, allowing viewers to respond to the questions posed in the video and express their viewpoints after watching.

Video branching diagram

This project uses the following open-source libraries:

onnxruntime - Licensed under the MIT License.

fastapi - Licensed under the MIT License.

screeninfo - Licensed under the MIT License.

hsemotion-onnx - Licensed under the Apache-2.0 License.

onnx - Licensed under the Apache-2.0 License.

opencv-python - Licensed under the Apache-2.0 License.

pymongo - Licensed under the Apache-2.0 License.

numpy - Licensed under the BSD-3-Clause License.

uvicorn - Licensed under the BSD-3-Clause License.

GitHub: https://github.com/Shanboy5566/hsemotion-onnx

This work was co-produced by the National Taiwan Museum of Fine Arts and Ars Electronica in 2024, with support from the Ministry of Culture, Republic of China (Taiwan), under the Technology Art Venues Innovation Project and the Taiwan Content Plan.

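Director: 陽春麵研究舍

Executive Director: 丁啟文

Script Supervisor: 陳姿尹

Screenwriter: 丁啟文

Assistant Screenwriters: 謝富丞, 陽春麵研究舍

Producer: 陽春麵研究舍

Co-producers: Ars Electronica, National Taiwan Museum of Fine Arts

Executive Producers: 邱誌勇, 賴駿杰, Laura Welzenbach, Emiko Ogawa, Lisa Shchegolkova

Associate Producer: PanSci

AI Interactive System Development: 陳宣伯, 莊向峰

Cast: 洪德高, 鄭國威

Cinematography: 廖鏡文

Lighting: 梁敦學

Sound Recording: 顏英琪

Editing: 陳姿尹, 莊向峰

Color Grading: 莊向峰

Visual Effects: 黃詣翔, 陳仲威

Sound Director: 陳奎瀚

Subtitle Translation: 戴思博

Graphic Design: 周芳伃

Special Thanks: 沈伯丞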